Functionally thinking

Let’s refresh our minds with some concepts you learned in high school and may have forgotten.

In Mathematics, we have something neat for playing with numbers: given one number, we operate on it and return another.

For instance, we could input the number 100 and receive 103.5 back.

We are even allowed to use more than one number as input. We take this pair of numbers, transform them the way we want, and return the result. Since it is just a matter of transformation, feeding the same values into this bubble will always produce the same result. We call this bubble a “function”.
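In Ruby, the language of most code in this post, a lambda plays the role of this bubble. A minimal sketch; `increase` and `combine` are illustrative names, with `increase` mirroring the 100 → 103.5 example above:

```ruby
# Hypothetical functions: each output depends only on the inputs.
increase = ->(x) { x + 3.5 }      # one number in, one number out
combine  = ->(a, b) { a * 2 + b } # a pair of numbers in, one number out

puts increase.call(100) # 103.5
puts combine.call(3, 4) # 10
puts combine.call(3, 4) # 10 again: same inputs, same result
```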

Functions are a basic concept in the origin of computers, which were created to deliver faster results for problems that were hard or slow to solve by hand. If you had the chance to decrypt a message exchanged between two allies in a war, reading the original message quickly was a matter of survival: it helped you prepare your army before the enemy's attack. You feed some apparently random characters to the decryption function, which is responsible for transforming them, and receive a readable message back. It is exactly the kind of function you learned in school, but with letters in place of numbers.
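A toy decryption function makes the idea concrete. This is a minimal sketch of a Caesar shift (every letter moved a fixed number of places in the alphabet), a deliberately simple cipher chosen only for illustration; `decrypt` is a hypothetical name:

```ruby
# Toy decryption: shift every uppercase letter back by `shift` places.
# Other characters (spaces, punctuation) pass through unchanged.
def decrypt(ciphertext, shift)
  ciphertext.each_char.map do |char|
    if char.match?(/[A-Z]/)
      # Ruby's % returns a non-negative result for a positive divisor,
      # so shifting back past "A" wraps around to the end of the alphabet.
      ((char.ord - "A".ord - shift) % 26 + "A".ord).chr
    else
      char
    end
  end.join
end

puts decrypt("DWWDFN DW GDZQ", 3) # ATTACK AT DAWN
```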

No Pain, No Gain

No, it isn’t easy to learn anything in Mathematics. But there is a reason for this.

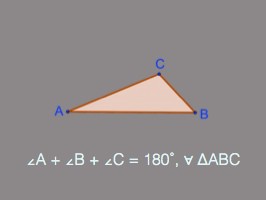

∠A + ∠B + ∠C = 180°, ∀ ΔABC

Math aims to define concepts and use them to build larger structures. Take the statement above: do you understand it? Translated to English, it says something like “For every triangle ABC, the sum of angles A, B and C is equal to 180 degrees”.

That should already be enough to get the idea, but to get even more out of this definition, you must know what a “triangle”, an “angle” and a “degree” are and how these concepts relate to each other.

To understand the larger structure, you need to know the concepts used to build it. In return, you get a lot of information from very few words.

λ calculus (or just Lambda calculus if you don’t want to sound cool)

Between 1924 and 1930, two mathematicians did major work toward making functions more powerful than ever. Moses Schönfinkel came first, showing that, yes, we can create functions that depend less and less on external variables. This motivated Haskell Curry's work showing that even functions with multiple arguments can be built by combining single-argument functions.
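Ruby can show the idea in a few lines: a two-argument function rebuilt out of one-argument functions, a technique now called currying. A minimal sketch; `add` and `add_curried` are illustrative names, while `Proc#curry` is Ruby's built-in way to perform the same conversion:

```ruby
# A function of two arguments...
add = ->(a, b) { a + b }

# ...rewritten as a one-argument function that returns another
# one-argument function.
add_curried = ->(a) { ->(b) { a + b } }

puts add.call(2, 3)              # 5
puts add_curried.call(2).call(3) # 5

# Ruby can perform this conversion automatically.
add_two = add.curry[2] # a new function still waiting for `b`
puts add_two[3]        # 5
```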

In the 1930s, Alonzo Church created a whole new field called Lambda calculus: a notation for defining and applying operations using nothing but functions. He proposed that every computable numeric function can be expressed by lambda terms, or, as you might know them today, simply “lambdas”. Think about the following function:

λx. x + 3

This is the notation developed to express functions in this field. The example defines a function that receives one argument, x, and, when applied, returns x plus 3.
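For comparison, here is the same function written as a Ruby lambda (Ruby even calls them lambdas). A minimal sketch, with `plus_three` as an illustrative name; `->(x)` plays the role of `λx.`:

```ruby
# λx. x + 3 in Ruby: `->(x)` introduces the argument,
# and the block body is what the function returns.
plus_three = ->(x) { x + 3 }

puts plus_three.call(4) # 7
puts plus_three.(4)     # 7, shorthand for .call
```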

But I mentioned that we can express ANY computable numeric function. That includes addition, multiplication, loops and even conditionals. Together with a name you may know better, Alan Turing, he showed that Lambda calculus can perform the same computations as a Turing machine. In other words, every sequence of operations you write in any programming language can be expressed with functions alone, and both versions return the same value.
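To make “just functions” tangible, here is a sketch of Church encoding using Ruby lambdas: numbers and booleans built only from one-argument functions, with addition, multiplication and a conditional on top. The constant names are illustrative, and `TO_INT` is a helper outside the lambda calculus, used only to turn a result back into a Ruby integer we can print. Loops are left out to keep it short.

```ruby
# Church numerals: the number n means "apply f to x, n times".
ZERO = ->(f) { ->(x) { x } }
SUCC = ->(n) { ->(f) { ->(x) { f.(n.(f).(x)) } } }
ADD  = ->(m) { ->(n) { ->(f) { ->(x) { m.(f).(n.(f).(x)) } } } }
MUL  = ->(m) { ->(n) { ->(f) { m.(n.(f)) } } }

# Church booleans: a boolean is a function that picks one of two options.
CHURCH_TRUE  = ->(a) { ->(_b) { a } }
CHURCH_FALSE = ->(_a) { ->(b) { b } }
IF = ->(cond) { ->(when_true) { ->(when_false) { cond.(when_true).(when_false) } } }

# Helper outside lambda calculus: count how many times f gets applied.
TO_INT = ->(n) { n.(->(k) { k + 1 }).(0) }

ONE   = SUCC.(ZERO)
TWO   = SUCC.(ONE)
THREE = ADD.(ONE).(TWO)

puts TO_INT.(THREE)                  # 3
puts TO_INT.(MUL.(TWO).(THREE))      # 6
puts IF.(CHURCH_TRUE).("yes").("no") # yes
```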

Relation with computation #1: functions are immutable

In Mathematics, functions are always immutable.

```
gross_amount(price) = price * 1.03
gross_amount(100) => 103
```

When we defined the function “gross_amount”, we also defined the relation between the numbers and how to get to the result. The same holds in computation.

```
gross_amount(100) => 103
gross_amount(100) => 103
```

We can run this function as many times as we want, and nothing will change its result. Calling it with 100 must always return 103, since 100 * 1.03 will always be 103. Wait 30 minutes and try again: 103. Multiplication didn’t change its rules, so we get the same result.
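Here is `gross_amount` as a Ruby method, as a minimal sketch. The `round(2)` call is our addition: 1.03 has no exact binary floating-point representation, so rounding to cents keeps the printed value tidy.

```ruby
# Pure: the result depends only on `price`.
def gross_amount(price)
  (price * 1.03).round(2) # round to cents to hide floating-point noise
end

puts gross_amount(100) # 103.0
puts gross_amount(100) # 103.0, now and in 30 minutes
```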

Even functions made to generate random numbers are immutable. To understand how, watch this Numberphile video; it explains it far better than I could.
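The short version: a pseudo-random generator is a function from a seed (its current state) to the next number. A minimal sketch: `next_state` is a hypothetical linear congruential step whose constants are illustrative, and Ruby's own `Random.new(seed)` shows the same property.

```ruby
# A pseudo-random step is just a function: same state in, same state out.
def next_state(state)
  (1_103_515_245 * state + 12_345) % 2**31
end

puts next_state(1) # 1103527590
puts next_state(1) # 1103527590 again

# Ruby's built-in generator behaves the same way once the seed is fixed.
a = Random.new(42).rand(100)
b = Random.new(42).rand(100)
puts a == b # true
```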

```
x(y) = 10 * y
x(10) = 100
x(12) = 120
x(3001.23) = 30012.3
```

Since these functions always return the same result when called with the same parameters, the computer can remember the result from the last time you called them and hand it back without recomputing it. This is called “memoization”.
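A minimal memoization sketch in Ruby, assuming the wrapped function is pure. `memoize` is a hypothetical helper, not a standard library method, and the `calls` counter exists only to show that the second call never reaches the original function:

```ruby
# Wrap a pure one-argument function so each result is computed only once.
def memoize(fn)
  cache = {}
  ->(arg) { cache.fetch(arg) { cache[arg] = fn.call(arg) } }
end

calls = 0
x = ->(y) { calls += 1; 10 * y } # x(y) = 10 * y, counting how often it runs
fast_x = memoize(x)

puts fast_x.call(10) # 100 (computed)
puts fast_x.call(10) # 100 (taken from the cache)
puts calls           # 1
```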

But Irio, I can’t memoize all of my functions.

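```ruby
# Not a function: the file name depends on the clock, and writing to
# disk is a side effect, so every call can do something different.
def store_contributions(contributions)
  File.write("#{Time.now.to_i}_contributions", contributions.to_s)
end
```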

You are cheating. This is a procedure, not a function: it performs an I/O operation, and what it does depends on the current time. So yes, it must be run in a different way.
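One common way to treat it differently, offered here as a sketch rather than as the author's recipe, is to keep the computation pure and confine the side effects to a thin wrapper. `contributions_filename` is a hypothetical helper introduced only for this example:

```ruby
# Pure part: same timestamp in, same file name out. Easy to memoize and test.
def contributions_filename(timestamp)
  "#{timestamp}_contributions"
end

# Impure part: reads the clock and touches the disk, kept as small as possible.
def store_contributions(contributions)
  File.write(contributions_filename(Time.now.to_i), contributions.to_s)
end

puts contributions_filename(1_400_000_000) # 1400000000_contributions
```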

Relation with computation #2: expressions do not have order

```
x(y) = 10 * y
y = 7
z(x) = 5 * x + 2
```

Think about this system of equations. If I want to know z(x(y)), that is, z applied to x(7) = 70, does it matter whether I calculate x, y or z first? The result will always be 5 * 70 + 2 = 352, right?
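The same system in Ruby, as a minimal sketch. Because `x` and `z` are pure, evaluating `x` first and then `z`, or building the composition `z << x` first and applying it afterwards, gives the same 352. (`Proc#<<` composes two callables and needs Ruby 2.6 or later.)

```ruby
x = ->(n) { 10 * n }
y = 7
z = ->(n) { 5 * n + 2 }

# Order 1: evaluate the inner function first, then the outer one.
inner = x.call(y)
puts z.call(inner) # 352

# Order 2: build the composition first, supply the input last.
z_after_x = z << x     # a new function: n -> z(x(n))
puts z_after_x.call(y) # 352
```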

```ruby
numberOfProjects = 4 # with 0, the division below would raise ZeroDivisionError
contribution = 1000
contributedToEachProject = contribution / numberOfProjects
puts contributedToEachProject
```

In this case, I don’t need to calculate contributedToEachProject until I reach the 4th line. Nobody uses it before then, so why should I care? I just need to remember how to calculate numberOfProjects, contribution and contributedToEachProject. When I ask for contributedToEachProject to do something really useful (printing it on the screen), I go to its definition. It needs the values of contribution and numberOfProjects, so I get them. The order of evaluation could be lines 4, 3, 2, 1 without any problem. What does this mean? Definitions that don’t depend on each other, like lines 1 and 2, could be evaluated in parallel, using multiple cores of my processor.

Since I don’t evaluate expressions until they are really needed, we call this “lazy evaluation”.
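Ruby evaluates eagerly by default, but the idea can be simulated by hand. A minimal sketch: wrapping an expression in a zero-argument lambda (a “thunk”) delays it until it is called, which is why the division by zero below never fires unless someone asks for the value. `Enumerator::Lazy` applies the same idea to collections, even infinite ones. The variable names are snake_case versions of the ones above.

```ruby
number_of_projects = 0
contribution = 1000

# A thunk: describes how to compute the value without computing it yet.
contributed_to_each_project = -> { contribution / number_of_projects }
# No ZeroDivisionError so far: nothing has been divided.
# Calling contributed_to_each_project.call would force the division (and raise).

# Enumerator::Lazy only computes the elements that are actually requested.
evens = (1..Float::INFINITY).lazy.map { |n| n * 2 }
p evens.first(3) # [2, 4, 6]
```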

But Irio, sometimes we do need order. I don’t want to detonate a missile and then launch it. For sure.

```
a_collection.insert(some_value)
a_collection.remove(42)
```

If the first line ran after the second, we might not get the expected result: if some_value were 42, it would be added after the removal and stay in a_collection, instead of being removed as we expected. The problem comes from the shared variable, a_collection.
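A minimal Ruby sketch of the problem, assuming `some_value` happens to be 42 and using an array's `push` and `delete` in place of the generic `insert` and `remove`:

```ruby
some_value = 42

# Intended order: insert, then remove.
a_collection = [1, 2]
a_collection.push(some_value)
a_collection.delete(42)
p a_collection # [1, 2]

# Same two statements, swapped: the 42 survives.
a_collection = [1, 2]
a_collection.delete(42)
a_collection.push(some_value)
p a_collection # [1, 2, 42]
```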

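```haskell
-- Haskell: (&) from Data.Function feeds the value on its left into the function on its right
import Data.Function ((&))
import Data.List (delete, insert)

a_collection = [1, 2, 3]
some_value = 42
result = a_collection & insert some_value & delete 42
```

```elixir
# Elixir: |> passes the value on its left as the first argument of the next call
a_collection = [1, 2, 3]
some_value = 42
a_collection |> List.insert_at(-1, some_value) |> List.delete(42)
```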

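Languages like Haskell and Elixir have ways around this that make the order of operations explicit. In the pipelines above, each step receives the result of the previous one, so the order is carried by the data rather than by a shared variable. Another such abstraction, used in Haskell for instance, is called a “monad”.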
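Ruby has no pipe operator, but `Object#then` (Ruby 2.6 or later) gets close to the Elixir style. A minimal sketch using non-mutating array operations, where each step returns a new array instead of changing a shared one:

```ruby
some_value = 42
a_collection = [1, 2]

# `+` and `-` build new arrays, so a_collection itself is never modified.
# The first `then` inserts, the second removes, in exactly that order.
result = a_collection.then { |c| c + [some_value] }.then { |c| c - [42] }

p result       # [1, 2]
p a_collection # [1, 2]
```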

Paradise #NOT

Unfortunately, programming with these concepts in languages that support them well, called functional languages, won’t always be faster than writing procedural code. Existing commercial processors essentially follow the Turing machine model, so telling the processor exactly what it will end up doing will, most of the time, bring faster results.

Sometimes, as when taking advantage of memoization, functional code will beat imperative code. But we also started using abstractions like object-oriented programming because maintaining purely imperative code wasn’t always the best option. With functional programming, we have another paradigm, focused on defining the solution to problems and grounded in its mathematical foundations. We start saying more with less, make better use of the multi-core processors we already have, and make sure we learn what was presented to us more than 70 years ago.

Appendix

Do you want to learn more about Lambda calculus? Study this theoretical introduction by Stuart A. Kurtz.

Do you want to learn more about Haskell? Read Learn You a Haskell.

Do you want to learn more about Elixir? The Pragmatic Bookshelf has something for you.

Do you want to learn more about how Mathematics can be applied in software development? Follow me!

Originally published at irio.tumblr.com.